In December 2022, OpenAI introduced ChatGPT to the public and ushered us into a new technological, economic and societal era. Neural networks and language models are not new: in academia and research they have been around for decades, and large language models (LLMs) grew out of that work. Still, the public release of ChatGPT, and now GPT-4, took everyone outside those fields by surprise. In fact, even its creators are surprised every day by the capabilities of this new technology.

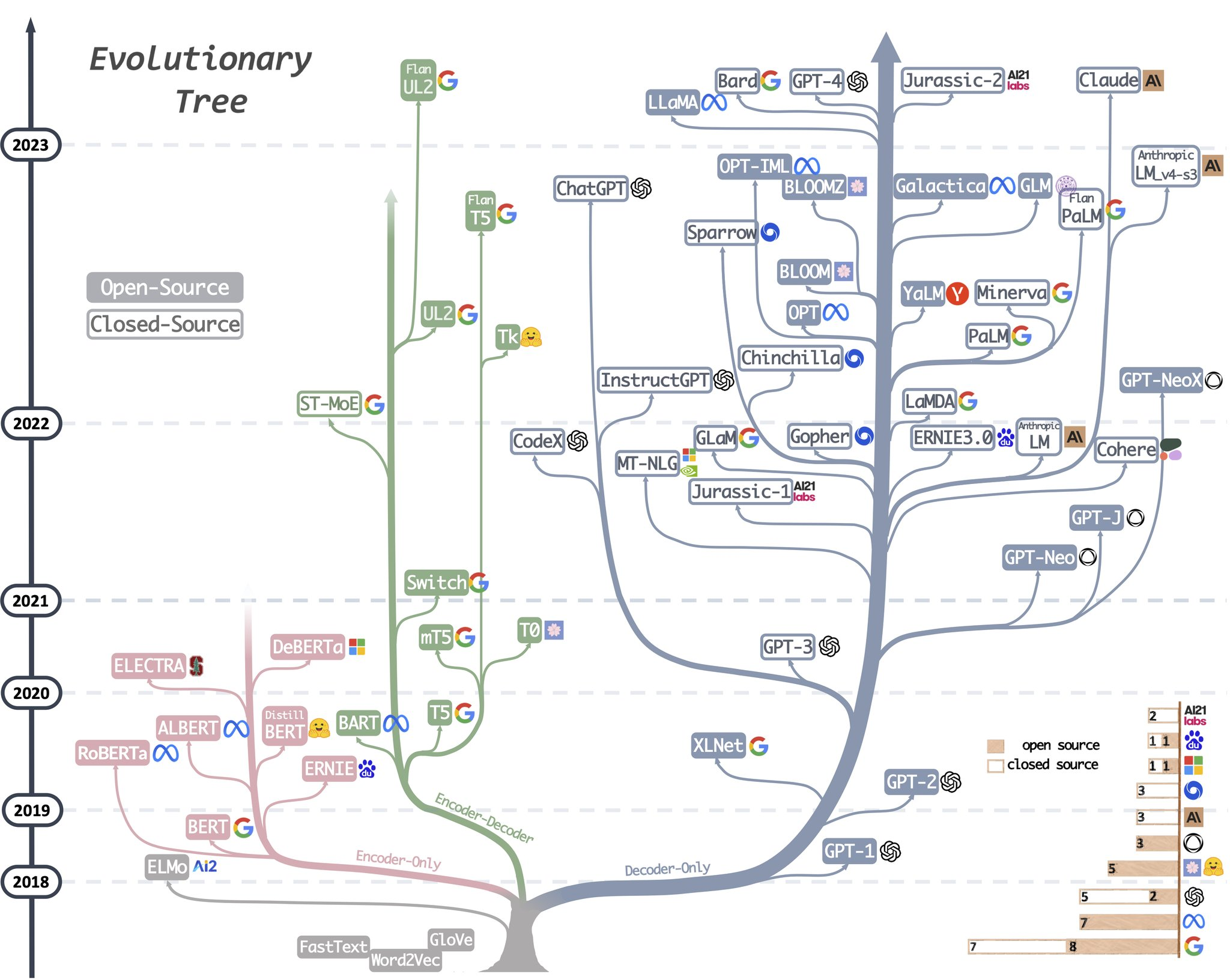

Before we dive into LLMs and ChatGPT in more detail, I would like to summarize the current state of AI in general.

We live in a time of social media, short dramatic news cycles and, usually, unreasonable hyperbole. When it comes to AI, there is plenty of sensational news, clickbait and overestimation of what AI can do, yet it is becoming very clear that we are all entering unknown territory. The growth is exponential and technological progress keeps accelerating. One thing Stephen Hawking warned us about was creating a self-improving AI, which is essentially what many people working on AI are trying to build right now.

Terms like “AGI” and “Singularity” have suddenly shifted from science fiction toward reality. For decades, Ray Kurzweil has predicted that machines will reach human-level intelligence around 2029 and that the Singularity will follow around 2045, while other researchers and scientists assumed it would take much longer or that it might not be achievable at all. Yet with each decade, and especially in the last few years, the general consensus in the scientific community has moved much closer to Kurzweil’s original prediction.

I recently watched a TED talk by Ray Kurzweil himself, recorded eight years ago. Not only did he anticipate, with remarkable accuracy, the kind of technology behind ChatGPT, Bing and Bard that we are only now experiencing, he also predicted the development of nanobots that could be implanted into our bodies for various purposes. One of those purposes would be connecting to the cloud and using computational resources and information beyond what the brain currently has. In other words, your mind would be directly connected to additional sources of information and computation, so you could not only know more but also think faster.

Ultimately, this could make it possible to detach our minds from our bodies and thus achieve a form of immortality, which is part of what Kurzweil calls the Singularity. So while his prediction about LLM technology proved accurate, I cannot judge how far along we are with nanobots. Still, the progress is clear, and the path Ray Kurzweil suggested years ago seems to be more and more entangled with our daily lives.

AGI stands for Artificial General Intelligence. I first heard this term in December, while listening to an interview with Sam Altman (OpenAI CEO). Fortunately, it is a bit easier to grasp than the Singularity described above.

Currently, we have narrow AI systems: intelligences that operate within a specific domain or with specific tools, for example AI that identifies cats in images or recommends movies on whichever streaming service you use. These systems have evolved rapidly over the last few years, but in principle they are still narrow. AGI, by contrast, is meant to go beyond any single domain, hence general intelligence: in its most ambitious form, an all-knowing entity that understands everything about society, the world and the universe. We are on that path as well, especially now with current LLM technology.

Let’s move from philosophy and hyperbole to a more practical overview of where artificial intelligence currently stands, what is actually possible right now and which tools are available.

The first example is a highly realistic deepfake video of Tom Cruise, which surfaced two or three years ago. Its creators built a photorealistic digital avatar of Tom Cruise from his images, without his involvement, and seamlessly integrated it into a video that followed a scripted dialogue. This demonstrates that it is possible to create a digital representation of any person and make it do anything. Although recreating Tom Cruise initially required significant time and resources, advances in the technology over the past few years have made it possible to create convincing digital replicas far faster and with far fewer resources.

Another example is MetaHuman real-time animation, demonstrated a few months ago by Epic Games, the creators of Unreal Engine, which is widely used in video games. During the presentation, an actress recorded a brief 10-second video on a mobile phone, displaying various emotions. The video was fed into AI software, which generated a digital replica of the actress on screen. The avatar faithfully replicated the actress’s movements and expressions. The technology also made it possible to transfer those facial expressions to another digital model, producing a realistic and convincing representation of a person who never actually performed those actions.

Furthermore, there are now AI systems that can adjust a person’s appearance in real time during a livestream. Photoshopped images have been common for some time, but these systems can alter both appearance and voice in real time, making it nearly impossible to tell real content from digitally manipulated content.

The final example is Alpha Persuade, a concept discussed in the presentation “The AI Dilemma”. It combines two elements: the ability to replicate a person’s voice from a five-second recording, and the approach behind AlphaGo, the AI developed by Google’s DeepMind that mastered the game of Go by simulating millions of games in a very short time. By simulating conversations in the voice of someone’s loved one, Alpha Persuade could convincingly manipulate people into actions they would normally be very unlikely to take. Alpha Persuade is a hypothetical system rather than a public product, but the capabilities it would combine already exist today.

Searching YouTube or Google, or asking tools like Bard and Bing, turns up a plethora of AI tools that can accomplish remarkable tasks. They cover many domains, letting users manipulate video, audio, presentations, social media posts and virtually any other digital content. The rapid pace of AI development is evident, with new tools emerging daily.

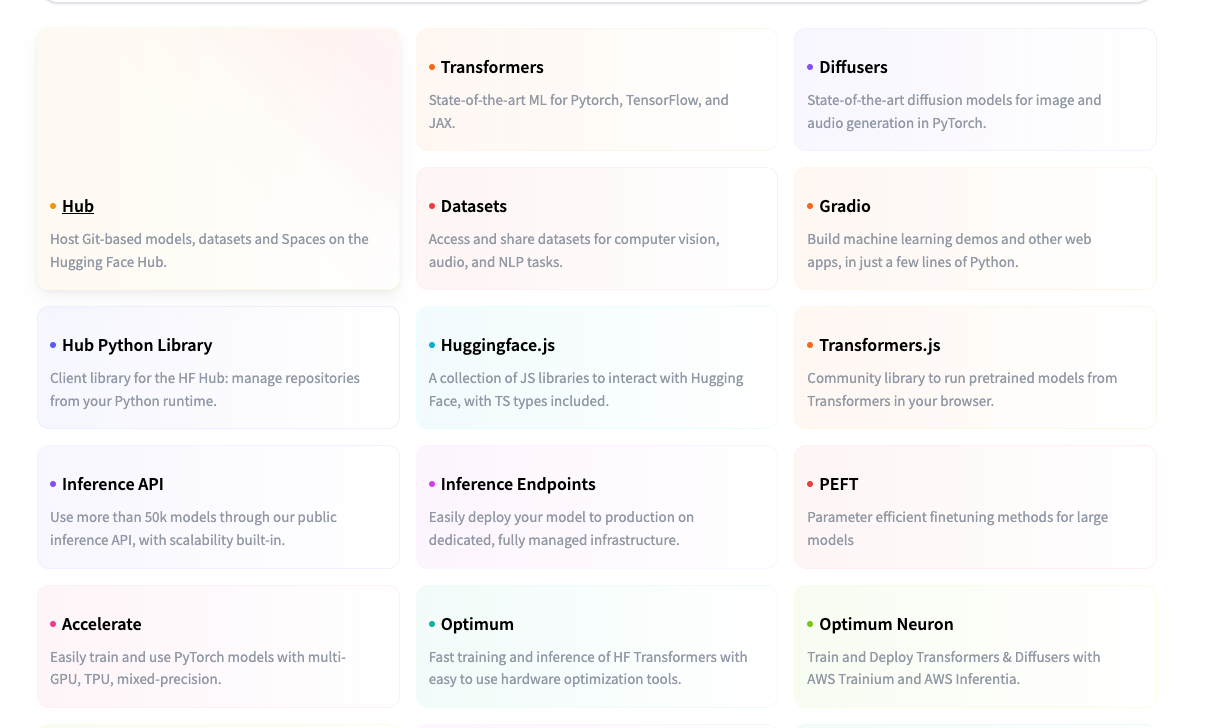

One popular platform for developers is Hugging Face, which hosts an ecosystem of AI models designed for specific purposes and provides access to them. Developers can also build applications on OpenAI’s GPT API. While ChatGPT still holds certain advantages, open-source models that perform quite similarly have emerged in recent months, which shows how quickly the field is progressing.

GPT stands for “Generative Pre-trained Transformer”, the name of OpenAI’s family of large language models, which are built on the transformer architecture that underlies most modern LLMs.

ChatGPT, on the other hand, refers specifically to a GPT model that has been further trained on conversations and instructions, so that it responds to prompts in dialogue rather than simply continuing the text.

When building applications on top of these language models, developers use the GPT API, which gives access both to the chat models and to GPT base models. A base model can be fine-tuned on a specific dataset according to the developer’s requirements.
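To make the fine-tuning step more concrete, here is a minimal sketch of preparing a training file. It assumes the JSON Lines layout with `prompt` and `completion` fields that OpenAI documents for fine-tuning its base models; the function name and the example pairs are hypothetical, and the exact format should be checked against the current API documentation before uploading anything.

```python
import json

def to_finetune_jsonl(pairs):
    """Serialize (prompt, completion) pairs as JSON Lines: one JSON object per line."""
    lines = []
    for prompt, completion in pairs:
        record = {"prompt": prompt.strip(), "completion": completion.strip()}
        # ensure_ascii=False keeps non-English characters readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"

# Hypothetical examples; a real dataset would hold many more domain-specific pairs.
examples = [
    ("Classify the sentiment: I love this product!", "positive"),
    ("Classify the sentiment: The delivery was late again.", "negative"),
]

print(to_finetune_jsonl(examples), end="")
```

Each line of the output is an independent JSON object, which is what makes the format easy to stream, validate and append to.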

Thanks to this, it is already possible to offer products and services built on AI models that are available for free or for a very reasonable fee.

While many agencies and companies explore all the possibilities and modalities of AI, at Webikon we want to focus primarily on LLMs, text-based generative models such as GPT, and gradually add image generation as well as sound capabilities (speech-to-text and text-to-speech).

First of all, GPT makes it easy to build intelligent search for any website. We scrape the website’s content and, using the embeddings offered by the GPT API, convert it into vectors that capture its semantic meaning.
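The indexing step can be sketched in Python as below, assuming the pages have already been downloaded as HTML strings. The class and function names are hypothetical, and `fake_embed` is only a stand-in so the example runs offline; in a real setup, `embed` would wrap a call to the embeddings endpoint of the GPT API, and the resulting records would be stored in a database.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects a page's visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.parts.append(data.strip())

def page_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

def build_index(pages, embed):
    """Turn {url: html} into records holding the url, its text and its vector.

    `embed` is a placeholder: any callable that maps a list of strings to a
    list of vectors, e.g. a wrapper around an embeddings API.
    """
    urls = list(pages)
    texts = [page_text(pages[url]) for url in urls]
    vectors = embed(texts)
    return [{"url": u, "text": t, "vector": v} for u, t, v in zip(urls, texts, vectors)]

# Stand-in embedding so the sketch runs offline; it carries no semantic meaning.
def fake_embed(texts):
    return [[float(len(t)), float(t.count(" ") + 1)] for t in texts]

index = build_index(
    {"/about": "<html><body><h1>About us</h1><p>We build websites.</p>"
               "<script>track()</script></body></html>"},
    fake_embed,
)
print(index[0]["text"])  # About us We build websites.
```

Passing `embed` in as a parameter keeps the scraping logic independent of any particular embedding provider.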

When a visitor types into the website’s search field, we take the query, convert it into a vector as well and compare it to the vectors stored in the database. This gives you semantic search, based on meaning rather than old-fashioned keyword matching, so the search engine can recognize what the user is actually asking for and offer relevant results.
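The comparison is commonly done with cosine similarity between the query vector and each stored vector; that choice is assumed here rather than taken from our implementation. In this minimal sketch the URLs and the three-dimensional vectors are made up for illustration (real embeddings have hundreds or thousands of dimensions), and a production system would usually delegate the ranking to a vector database.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def semantic_search(query_vector, index, top_k=3):
    """Return the top_k (score, url) pairs, most similar first."""
    scored = [(cosine_similarity(query_vector, rec["vector"]), rec["url"]) for rec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

# Hypothetical pre-computed vectors for three pages.
index = [
    {"url": "/pricing", "vector": [0.9, 0.1, 0.0]},
    {"url": "/blog/chatgpt", "vector": [0.1, 0.9, 0.2]},
    {"url": "/contact", "vector": [0.0, 0.2, 0.9]},
]
query_vector = [0.2, 0.8, 0.1]  # imagine the embedding of "articles about GPT"

for score, url in semantic_search(query_vector, index, top_k=2):
    print(f"{score:.2f} {url}")
```

Because cosine similarity ignores vector length, a short query and a long page can still be judged similar if they point in the same semantic direction.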

The next logical product GPT allows us to offer is a chatbot for your website. It builds on intelligent search: once the website’s data is converted into vectors and user queries are converted and compared against them, we can add memory and context, allowing a conversation with the system. The result is an intelligent chatbot that knows your website’s content and can talk with you about it.
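A minimal sketch of how memory and context can be combined into a single request. It assumes the list-of-messages format (`role` and `content` fields) used by OpenAI’s chat API; the function name, the system prompt wording and the `max_turns` limit are hypothetical choices. The returned list would be sent as the `messages` of a chat completion request, and the model’s answer appended to `history` for the next turn.

```python
def build_messages(question, retrieved_passages, history, max_turns=4):
    """Assemble a chat request: retrieved website context, recent history, new question.

    history is a list of (user_text, assistant_text) pairs from earlier turns;
    only the last max_turns pairs are kept so the prompt stays within the
    model's context window.
    """
    context = "\n\n".join(retrieved_passages)
    messages = [{
        "role": "system",
        "content": (
            "You are the assistant for this website. Answer using only the context "
            "below; if the answer is not there, say that you don't know.\n\n"
            f"Context:\n{context}"
        ),
    }]
    recent = history[-max_turns:] if max_turns > 0 else []
    for user_text, assistant_text in recent:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages

# Hypothetical data: passages would come from the semantic search step.
history = [("Do you build websites?", "Yes, we design and develop websites.")]
passages = ["Our chatbot answers questions about the content of your website."]
for message in build_messages("And chatbots?", passages, history):
    print(message["role"], "->", message["content"][:40])
```

The retrieved passages supply the “knowledge” and the trimmed history supplies the “memory”; the model itself stays unchanged.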

Going a step further, we can build our own internal intelligent assistant to increase our productivity, give us objective feedback and suggest new options for our processes and projects. Once again, this builds on the previous two examples, using a more complex data structure and prompt engineering techniques to create an intelligent, knowledgeable and relevant assistant. We could even call it an agent: thanks to GPT-4, these models are approaching “reasoning” capabilities, and we can connect them to the internet and, by extension, let them execute scripts or code. So we are moving past a passive listener that waits for your input, towards an active agent that actually thinks and acts.
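The agent idea can be illustrated with a minimal loop in which the model either requests a tool or gives a final answer. Everything here is an assumption for illustration: the `ACTION:`/`FINAL:` text protocol, the function names and the scripted stand-in for the model. In practice, `call_model` would send the transcript to GPT-4, and production systems more often rely on structured mechanisms such as OpenAI’s function calling. The `tools` dictionary is where internet access or code execution would plug in; restricting the agent to whitelisted tools is a deliberate safety choice.

```python
import re

# Hypothetical text protocol: the model answers either
# "ACTION: <tool_name>: <argument>" or "FINAL: <answer>".
ACTION_RE = re.compile(r"^ACTION:\s*(\w+):\s*(.*)$", re.DOTALL)

def run_agent(task, call_model, tools, max_steps=5):
    """Let the model call whitelisted tools until it returns a final answer."""
    transcript = [f"TASK: {task}"]
    for _ in range(max_steps):
        reply = call_model("\n".join(transcript)).strip()
        transcript.append(reply)
        if reply.startswith("FINAL:"):
            return reply[len("FINAL:"):].strip()
        match = ACTION_RE.match(reply)
        if not match or match.group(1) not in tools:
            # Tell the model its request was invalid and let it try again.
            transcript.append("OBSERVATION: unknown or malformed action")
            continue
        name, argument = match.groups()
        result = tools[name](argument.strip())
        transcript.append(f"OBSERVATION: {result}")
    return None  # no final answer within max_steps

def scripted_model(replies):
    """Stand-in for a real LLM call: returns pre-written replies in order."""
    remaining = iter(replies)
    return lambda prompt: next(remaining)

tools = {"word_count": lambda text: len(text.split())}
model = scripted_model([
    "ACTION: word_count: semantic search beats keywords",
    "FINAL: The phrase has 4 words.",
])
print(run_agent("How many words are in the phrase?", model, tools))  # The phrase has 4 words.
```

The `max_steps` cap keeps a confused model from looping forever, and every tool result is fed back as an observation so the next model call can reason about it.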

Last but not least, we are currently exploring ways to incorporate image and sound into our processes as well, to build our own voice-controlled AI agent and to speed up app prototyping and design. Delivering the best quality at a much faster rate is the main goal, and current AI gives us everything we need to achieve it.

AI Developer

I have always enjoyed history, philosophy and languages, and in high school I added programming languages to the list. What I like about programming is that it constantly pushes you forward; there is always something to improve. That’s also why ChatGPT, as a large language model, became my new hobby practically overnight. I’ve also started a Facebook group for ChatGPT enthusiasts, where we share news from the world of artificial intelligence, and you can join it too.